
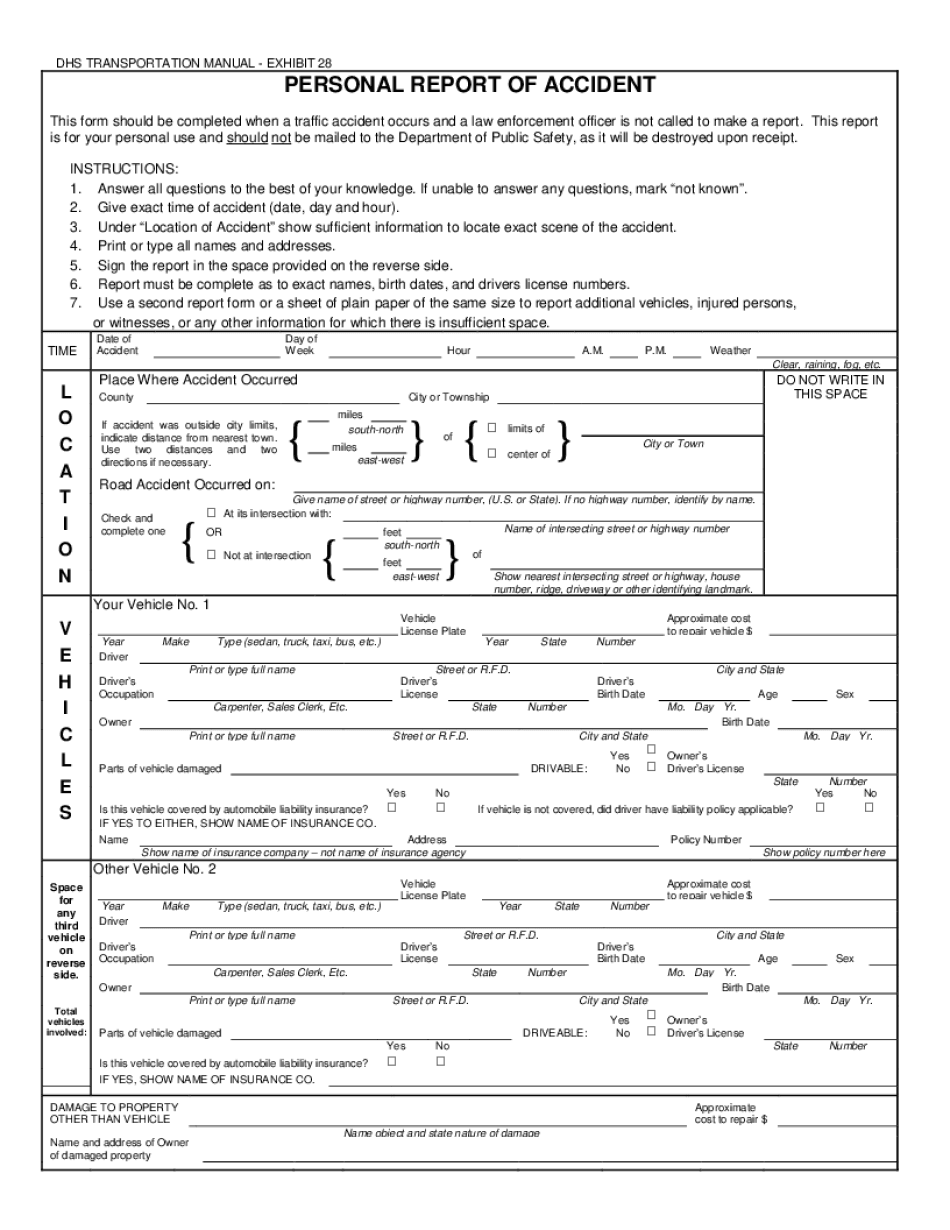

Wisconsin Driver Report of Accident Form: What You Should Know

If you are a Wisconsin resident who owns a car, a boat, or a similar vehicle with a valid, unexpired registration, this form may apply to you. The form asks for your license number and the vehicle's manufacturer, year, and make.

Online solutions help you manage your records and make your workflows more efficient. Follow this quick guide to complete Georgia Sr 13, avoid mistakes, and submit it on time:

How to complete any Georgia Sr 13 online:

- On the site with the document, click Begin immediately and proceed to the editor.

- Use the hints to fill in the relevant fields.

- Add your personal information and contact details.

- Make sure that you enter correct details and numbers in the appropriate fields.

- Carefully check the content of the form, including grammar and spelling.

- Go to the Support area if you have questions, or contact our assistance team.

- Add an electronic signature to your Georgia Sr 13 using the Sign tool.

- Once the form is complete, press Completed.

- Send the completed document by email or fax, print it out, or save it to your device.

The PDF editor lets you make changes to your Georgia Sr 13 from any internet-connected device, customize it to your requirements, sign it electronically, and distribute it in different ways.